Prompt: Create a realistic photo of a black Labrador Retriever dog, same shape and colour as the reference image, surfing on a colourful cartoon-style surfboard on the ocean, with bright waves and a sunny beach in the background. (Using Adobe Firefly)

AI-generated content has evolved dramatically since its initial surge in 2022–2023. With the rise of multimodal foundation models such as Gemini 3, GPT-5.1, Claude 3.7, and Grok 3, along with image and video generators such as Nano Banana Pro and Sora, organizations now produce vast volumes of text, imagery, audio, code, and synthetic media at unprecedented speed.

As adoption grows, so do the ethical stakes. Questions around authorship, ownership, transparency, safety, accountability, copyright, and regulatory compliance have moved from academic debates to operational realities—especially in marketing, content creation, customer engagement, and enterprise automation.

This blog explains:

- What counts as AI-generated content in 2025

- The expanded ethical concerns (now influenced by global AI regulations)

- Real examples of generative tools today

- Best practices to maintain responsible and compliant AI use

What Is AI-Generated Content?

AI-generated content is any text, image, video, audio, code, or multimodal output created wholly or partially by artificial intelligence models, often via natural language prompts, voice commands, or automated workflows.

Today's AI tools support:

- Real-time text + image + video generation

- Agentic workflows that autonomously research, summarize, design, and publish

- Synthetic voices and avatars nearly indistinguishable from real humans

- Enterprise integrations with CMSs, CDPs, DAMs, and CRM platforms

For an executive whose day job is not decoding technology acronyms, this gets overwhelming quickly.

Examples

1. Text — ChatGPT (GPT-5.1)

Prompt: Write an introduction to a blog discussing ethical AI use.

Output: A clear, contextual paragraph highlighting ethical risks, transparency, and the impact of generative AI on society.

2. Image — Nano Banana Pro/Midjourney v8 / DALL-E 4

Prompt: A photo of a teddy bear on a skateboard in Times Square.

Result: Hyper-realistic, dynamic, and high-resolution imagery suitable for production-grade marketing.

3. Video — Runway Gen-3 Alpha / Synthesia 2025

Prompt: A 20-second explainer video in a professional office setting.

Result: A fully generated, voiced, and animated clip with customizable avatars and brand styling.

4. Other notable 2025 tools:

- Jasper 2025 (marketing content)

- Adobe Firefly 3 + AEM integrations

- HeyGen 2025 (high-fidelity video avatars)

- ElevenLabs (synthetic voices)

Trust Is the Real AI Bottleneck

If your content isn’t governed, it’s not ready. If it’s not ready, neither is your business.

Why AI Content Keeps Growing

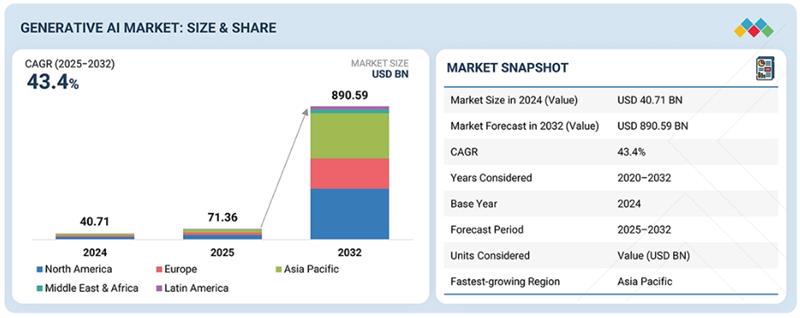

According to MarketsandMarkets (2025), the generative AI market is projected to grow from $71.36B in 2025 to $890.59B in 2032 (CAGR 43.4%). While this is more grounded than 2023’s optimistic trillion-dollar forecasts, growth remains rapid due to:

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

1. Advancements in Technology

- Massive multimodal models capable of reasoning, planning, and real-time interaction

- Faster training and fine-tuning on enterprise data

- Improved AI safety, watermarking, and governance features

2. Expansion of Use Cases

AI now powers:

- Marketing copy and campaign orchestration

- Personalized user journeys (e.g., Adobe Journey Optimizer)

- Interactive synthetic videos

- Data analysis and operations automation

- VR/AR prototypes

- Code generation and DevOps support

3. Productivity & Accessibility

AI accelerates research, ideation, and content production. Voice-mode tools allow on-the-go prompt creation, making AI part of daily workflows.
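As a quick sanity check on the MarketsandMarkets projection quoted at the start of this section, the compound annual growth rate (CAGR) can be recomputed from the two endpoint values. A minimal Python sketch; the `cagr` helper is our own illustration, and the figures are the ones cited in this post:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# MarketsandMarkets (2025) projection cited above, in billions of USD.
start_2025 = 71.36
end_2032 = 890.59
years = 2032 - 2025  # 7 compounding periods

rate = cagr(start_2025, end_2032, years)
print(f"Implied CAGR: {rate:.1%}")  # prints: Implied CAGR: 43.4%
```

Running the check in reverse, `start_2025 * (1 + rate) ** years` reproduces the 2032 figure, which is a useful habit whenever a forecast quotes a start value, an end value, and a growth rate together.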

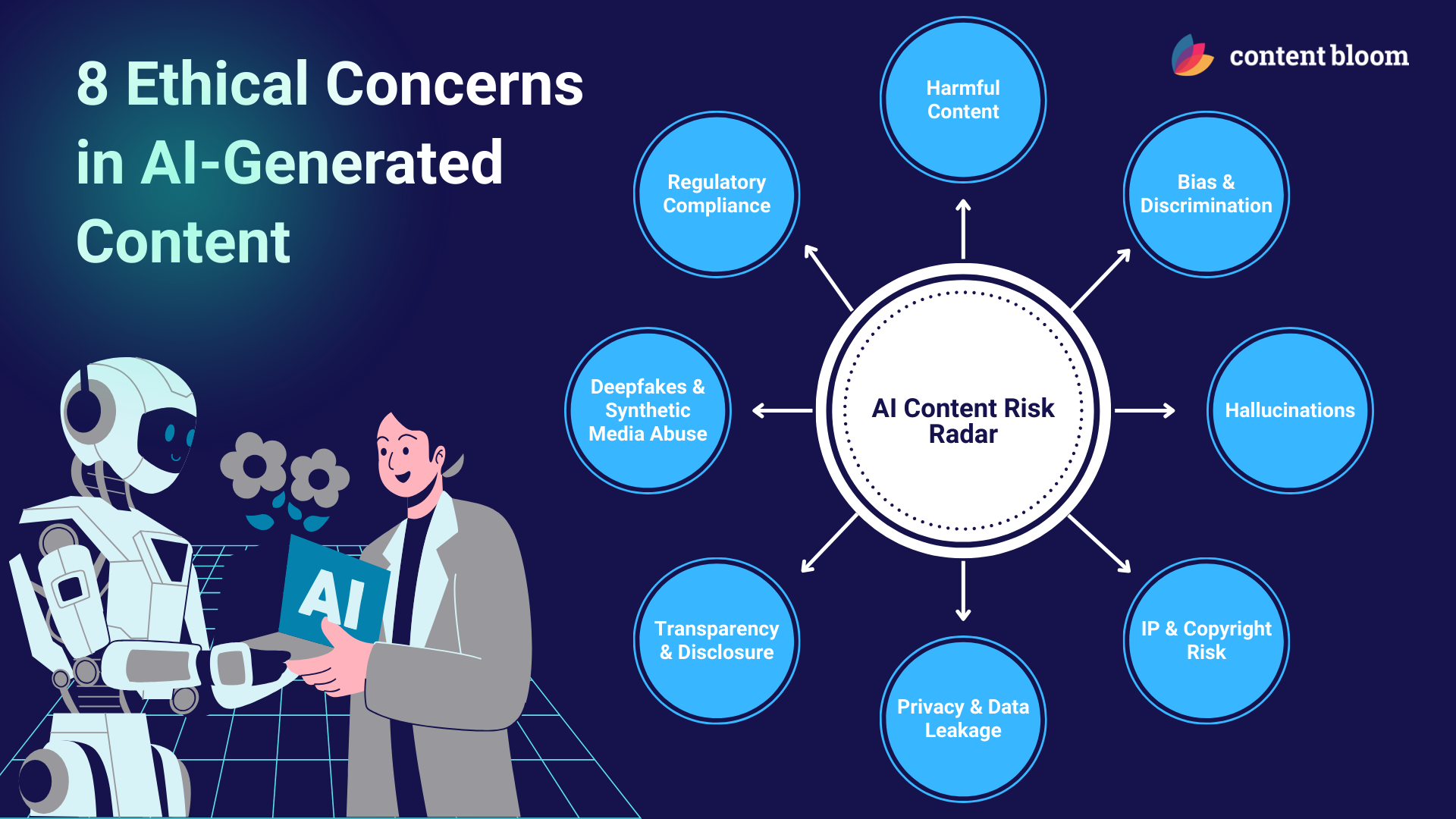

Ethical Concerns in AI-Generated Content

Ethical considerations have intensified as AI models become more capable and regulations more stringent. Key concerns include:

1. Harmful or Unsafe Content

Even with safety layers, models can still generate:

- Offensive language

- Misinformation

- Violence-adjacent or extremist content

- Manipulative or deceptive assets

Human review remains essential.

2. Embedded Bias and Discrimination

Bias persists due to:

- Training data skew

- Model generalization

- Reinforcement learning blind spots

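Bias audits are increasingly expected under global regulations and frameworks: the EU AI Act requires bias examination of training data for high-risk systems, and Singapore’s Model AI Governance Framework and evaluation work by the UK AI Safety Institute point in the same direction.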

3. Inaccuracy & Hallucination

AI hallucinations have decreased but are not eliminated. Enterprises must:

- Fact-check outputs

- Validate statistics

- Avoid over-reliance on AI-generated claims
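One lightweight way to make "validate statistics" routine is to pull every sentence containing a figure out of a draft so a reviewer can check each one against a source. A minimal Python sketch, assuming plain-text drafts; the regex and the `extract_numeric_claims` helper are illustrative rather than a standard tool, the sentence splitting is naive, and numbers written out in words will be missed:

```python
import re

# Illustrative pattern for figures such as "$71.36", "1,200", "2032", or "43.4%".
NUMBER_PATTERN = re.compile(r"\$?\d+(?:,\d{3})*(?:\.\d+)?%?")


def extract_numeric_claims(text: str) -> list[tuple[str, list[str]]]:
    """Pair each sentence that contains a figure with the figures found in it."""
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    claims = []
    for sentence in sentences:
        figures = NUMBER_PATTERN.findall(sentence)
        if figures:
            claims.append((sentence, figures))
    return claims


draft = (
    "Growth remains rapid. The market is projected to grow from $71.36B in 2025 "
    "to $890.59B in 2032 (CAGR 43.4%). Human review remains essential."
)
for sentence, figures in extract_numeric_claims(draft):
    print(figures, "->", sentence)
```

Each flagged sentence then goes to a fact-checker or SME with the list of figures they need to confirm.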

4. Intellectual Property & Plagiarism

AI models sometimes produce:

- Near-verbatim outputs

- Style mimicry

- Derivative works

Ongoing legal cases against OpenAI, Stability AI, and Meta reflect unresolved questions around data licensing and copyright.
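Near-verbatim output, the first risk listed above, can be screened crudely by measuring how many of an AI output's word n-grams also appear in a known source text (a "containment" score). A minimal Python sketch using word 5-grams; the n-gram size and the `FLAG_THRESHOLD` value are arbitrary assumptions to tune on your own content, and this is no substitute for a commercial plagiarism scanner or legal review:

```python
import re


def word_ngrams(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Lowercased word n-grams; punctuation is ignored."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i : i + n]) for i in range(len(words) - n + 1)}


def containment(output: str, source: str, n: int = 5) -> float:
    """Fraction of the output's n-grams that also appear in the source (0.0 to 1.0)."""
    out_grams = word_ngrams(output, n)
    if not out_grams:
        return 0.0
    return len(out_grams & word_ngrams(source, n)) / len(out_grams)


# Hypothetical review threshold, not an industry standard.
FLAG_THRESHOLD = 0.3

source = "AI hallucinations have decreased but are not eliminated, so enterprises must fact-check outputs."
output = "AI hallucinations have decreased but are not eliminated, so teams should still review drafts."
score = containment(output, source)
print(f"containment={score:.2f}, flag={score >= FLAG_THRESHOLD}")  # containment=0.50, flag=True
```

Containment is used here instead of symmetric similarity because the question is "how much of *our* output was copied", which should not shrink just because the source document is long.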

5. Privacy & Data Protection

Risks include:

- PII leakage through prompts

- Memorized data reproduction

- Improper training on sensitive information

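Compliance with GDPR, CPRA, and India’s DPDP Act is now essential, and Canada’s proposed AIDA, which stalled in Parliament in early 2025, signals the direction of future Canadian rules.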

6. Transparency, Disclosure & Watermarking

Many jurisdictions now require, or are phasing in requirements for:

- Clear labels on AI-generated media

- Watermarking or metadata embedding

- Disclosure when synthetic avatars or voices are used

Lack of disclosure may be treated as consumer deception.

7. Deepfakes & Synthetic Media Responsibility

With high-fidelity video generation, risks include:

- Fraud

- Election interference

- Identity misuse

- Reputational harm

Enterprises must establish verification and ethical-use controls.

8. Regulatory Compliance

EU AI Act

- Entered into force August 2024

- Prohibitions on unacceptable-risk practices apply from February 2025

- Requires transparency, data governance, and risk assessments for generative systems

US (State-Level)

- California AI Safety Act (2025) addresses discrimination and content misuse

- No comprehensive federal AI law yet

Asia-Pacific

- Japan: Fair training data guidelines

- Singapore: Model governance and watermarking

- India: DPDP Act enforcement around data usage

Ethical AI is no longer optional; it’s a compliance requirement.

Clarity Beats Speed in AI. Always

Rushed outputs cost more than slow ones. Govern your content before you scale it.

Best Practices for Responsible and Compliant AI Use

1. Define the Purpose Clearly

Avoid “open-ended generation.” Purpose-aligned prompts reduce harmful or irrelevant content.

2. Use Clear Instructions, Guardrails, and Constraints

Add:

- Tone guidelines

- Excluded topics

- Audience-specific boundaries

- Accuracy requirements

This significantly reduces the risk of unsafe or irrelevant outputs.
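The guardrails above can be captured once in a reusable prompt template, so every request carries the same tone, exclusions, audience, and accuracy rules instead of relying on each author to remember them. A minimal Python sketch; the `PromptGuardrails` class, its fields, and the instruction wording are hypothetical and not tied to any particular model API, which would simply receive the resulting string as its system or instruction text:

```python
from dataclasses import dataclass, field


@dataclass
class PromptGuardrails:
    """Hypothetical container for the constraints listed above."""

    tone: str
    audience: str
    excluded_topics: list[str] = field(default_factory=list)
    accuracy_rules: list[str] = field(default_factory=list)

    def build_prompt(self, purpose: str, task: str) -> str:
        """Combine a stated purpose, the task, and every guardrail into one instruction block."""
        lines = [
            f"Purpose: {purpose}",
            f"Task: {task}",
            f"Tone: {self.tone}",
            f"Audience: {self.audience}",
        ]
        if self.excluded_topics:
            lines.append("Do not discuss: " + ", ".join(self.excluded_topics))
        for rule in self.accuracy_rules:
            lines.append(f"Accuracy rule: {rule}")
        return "\n".join(lines)


guardrails = PromptGuardrails(
    tone="professional, plain language",
    audience="marketing leaders with no technical background",
    excluded_topics=["medical advice", "competitor pricing"],
    accuracy_rules=["Cite a source for every statistic", "Say 'unknown' rather than guess"],
)
print(guardrails.build_prompt(
    purpose="Explain our AI disclosure policy",
    task="Write a 150-word introduction for a blog post",
))
```

Keeping guardrails in one object makes them reviewable and versionable alongside your internal AI-use playbook, rather than scattered across ad-hoc prompts.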

3. Follow Global Guidelines & Organizational Policies

Align with:

- EU AI Act

- NIST AI Risk Management Framework

- ISO/IEC 42001 (AI Management Systems)

- Internal AI-use playbooks

4. Ensure Diversity in Input Data & Perspectives

Broader, more representative data and a wider range of reviewer perspectives reduce representational harms and stereotype reinforcement.

5. Monitor and Evaluate Outputs Continuously

Establish recurring audits for:

- Accuracy

- Bias

- Compliance

- Accessibility

- Inclusivity

6. Fact-Check with Subject Matter Experts

SMEs catch subtle inaccuracies that AI or general reviewers miss.

7. Strengthen Quality Control Processes

Include:

- Human-in-the-loop approval

- Plagiarism scans

- Watermark checks

- Regulatory compliance reviews
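Quality control is easiest to enforce as a gate that blocks publishing until every check has passed and a named human has signed off. A minimal Python sketch; the check names mirror the list above, while the `ReviewRecord` structure, its field names, and the example content ID are hypothetical and would in practice be wired into your CMS workflow:

```python
from dataclasses import dataclass, field
from typing import Optional

# Checks from the list above; each must be explicitly marked as passed.
REQUIRED_CHECKS = ("plagiarism_scan", "watermark_check", "compliance_review")


@dataclass
class ReviewRecord:
    content_id: str
    passed_checks: set[str] = field(default_factory=set)
    approved_by: Optional[str] = None  # human-in-the-loop approver

    def missing_steps(self) -> list[str]:
        """List everything still blocking publication, in a stable order."""
        missing = [check for check in REQUIRED_CHECKS if check not in self.passed_checks]
        if not self.approved_by:
            missing.append("human_approval")
        return missing

    def ready_to_publish(self) -> bool:
        return not self.missing_steps()


record = ReviewRecord(content_id="blog-ethical-ai")
record.passed_checks.update({"plagiarism_scan", "watermark_check"})
print(record.missing_steps())  # ['compliance_review', 'human_approval']
record.passed_checks.add("compliance_review")
record.approved_by = "editor@example.com"
print(record.ready_to_publish())  # True
```

Treating human approval as just another required step means automated checks can never publish content on their own.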

8. Maintain Transparency & Disclosure

Use labels such as:

- “Generated with AI”

- “Partially AI-assisted content”

- “Synthetic media”

This builds trust and meets legal requirements where disclosure is mandated.
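Label choice stays consistent when it is derived from how the content was produced rather than left to each author. A minimal Python sketch; the `AIInvolvement` categories and the decision rules (for example, treating any synthetic voice or avatar as "Synthetic media") are our assumptions, not legal guidance, and should be adapted to the jurisdictions you publish in:

```python
from enum import Enum
from typing import Optional


class AIInvolvement(Enum):
    NONE = "none"
    ASSISTED = "assisted"    # human-authored, AI used for drafting or editing
    GENERATED = "generated"  # primarily produced by an AI model


def disclosure_label(involvement: AIInvolvement, synthetic_person: bool = False) -> Optional[str]:
    """Return the disclosure label for a piece of content, or None if no AI was involved."""
    if synthetic_person:
        # Synthetic voices or avatars get the most explicit label.
        return "Synthetic media"
    if involvement is AIInvolvement.GENERATED:
        return "Generated with AI"
    if involvement is AIInvolvement.ASSISTED:
        return "Partially AI-assisted content"
    return None


print(disclosure_label(AIInvolvement.ASSISTED))  # Partially AI-assisted content
```

Recording `AIInvolvement` at creation time also gives you the documentation trail of how content was produced.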

9. Store and Handle Data Responsibly

Avoid entering sensitive data unless your platform is approved for PII-safe use (e.g., enterprise-tier AI services or privately hosted models).
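Where an approved platform isn't available, a basic safeguard is to redact obvious personal data before a prompt leaves your environment. A minimal Python sketch covering only email addresses and phone-number-like strings; the patterns are simplified assumptions that miss many forms of PII (names, street addresses, ID numbers) and may over-redact some long numeric strings such as dates, so treat it as a last line of defense alongside, not instead of, an approved platform:

```python
import re

# Simplified patterns; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(prompt: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)  # emails first, so their digits aren't read as phones
    prompt = PHONE.sub("[PHONE]", prompt)
    return prompt


raw = "Draft a reply to jane.doe@example.com, who called from +1 (201) 555-0123."
print(redact_pii(raw))
# Draft a reply to [EMAIL], who called from [PHONE].
```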

Optimize Your AI-Content Strategy

Artificial intelligence will continue to shape how businesses create, personalize, and distribute content. While the productivity benefits are undeniable, the ethical and regulatory implications must be proactively addressed.

Content Bloom helps enterprises:

- Implement responsible AI frameworks

- Build ethical content workflows

- Select and adopt AI-powered CMS and DXP tools

- Align marketing strategies with global compliance standards

- Ensure governance, transparency, and quality in all AI-derived content

Let’s discuss how responsible, secure, and ethical AI can help your organization scale content production without compromising trust, compliance, or creativity.

FAQs

1. Do we need to disclose when content is AI-generated?

Increasingly, yes. Several regulations and frameworks (the EU AI Act, Singapore’s Model AI Governance Framework, and various U.S. state laws) require or recommend clear disclosure when text, images, video, or voices are AI-generated. This includes labeling, watermarking, and transparency about synthetic avatars. Even where disclosure isn’t legally required, it’s now considered best practice because it protects consumer trust and reduces the risk of being seen as deceptive. Organizations that publish at scale should build disclosure into their workflows so teams don’t miss it during fast production cycles.

2. Who owns AI-generated content, and can it violate copyright?

Ownership of AI-created content is still evolving. Generative tools sometimes produce work that resembles copyrighted material or replicates patterns learned from training data. This is why many enterprises treat AI outputs like drafts—they’re reviewed, edited, and approved by humans before publishing. To stay safe, companies should use enterprise-grade tools, run plagiarism checks, avoid style mimicry that seems too close to an artist, and maintain clear documentation of how content was produced. This reduces copyright risk and keeps workflows aligned with compliance expectations.

3. How can we prevent AI content from being biased, harmful, or inaccurate?

Even advanced 2025 models still need guardrails. Bias can come from training data, hallucinations can occur during generation, and safety layers aren’t perfect. The most reliable approach is a combination of:

- Purpose-aligned prompts that define tone, exclusions, and accuracy requirements

- Human review for sensitive, regulated, or high-impact content

- Bias and quality audits built into your content process

- SME validation for statistics, claims, and technical accuracy

- Ongoing monitoring as models are updated

Ethical AI isn’t a one-time setup; it’s a continuous governance effort that grows with your content operations.
